I’m developing an application that might be useful to your “vision” problem: PacmanVision.

It basically lets you manipulate a stream of point clouds from RGB-D sensors online. It works with the Kinect One through iai_kinect2. With it you can crop precisely to remove the environment from the scene; then, assuming the object is on a table, you can remove the table as well with plane segmentation. After that you are left with a point cloud that contains only a view of your object. You could save it to a PCD file, make another scan from a different viewpoint, save that too, and so on …
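To make the cropping and table-removal steps concrete, here is a minimal NumPy sketch. It is not PacmanVision or PCL code: the helper names `crop_box` and `remove_dominant_plane`, the 1 cm threshold and the synthetic cloud are all assumptions made for illustration, and the plane fit is a plain RANSAC written out by hand.

```python
import numpy as np

def crop_box(points, lower, upper):
    """Keep only the points inside the axis-aligned box [lower, upper]."""
    mask = np.all((points >= lower) & (points <= upper), axis=1)
    return points[mask]

def remove_dominant_plane(points, threshold=0.01, iterations=200, seed=0):
    """Fit the largest plane with RANSAC and return the points that are NOT on it."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        sample = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # the three sampled points are (almost) collinear
            continue
        normal /= norm
        distances = np.abs((points - sample[0]) @ normal)
        inliers = distances < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    return points[~best_inliers]

# Synthetic scene (hypothetical data): a noisy table at z = 0, an object
# floating 5 to 15 cm above it, and some far-away background clutter.
rng = np.random.default_rng(1)
table = np.column_stack([rng.uniform(-0.5, 0.5, (2000, 2)),
                         rng.normal(0.0, 0.002, 2000)])
obj = rng.uniform([-0.05, -0.05, 0.05], [0.05, 0.05, 0.15], (200, 3))
background = rng.uniform(1.0, 3.0, (500, 3))
cloud = np.vstack([table, obj, background])

cropped = crop_box(cloud,
                   lower=np.array([-0.5, -0.5, -0.05]),
                   upper=np.array([0.5, 0.5, 0.5]))
object_only = remove_dominant_plane(cropped)
print(len(cloud), len(cropped), len(object_only))
```

On a real scan the box limits and the distance threshold would have to be tuned to the scene and to the sensor noise.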

When you have enough scans you could try to register them together with the PCL registration library and obtain a single point cloud containing the complete model of your object. From that you can also build a mesh, for example with [Meshlab](http://meshlab.sourceforge.net/), and BOOM, you have an STL for your URDF model!
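For a feeling of what registration does under the hood, here is a small point-to-point ICP sketch in NumPy. It is not the PCL API: the function names `best_rigid_transform` and `icp` and the test data are assumptions, and the brute-force nearest-neighbour search only scales to small clouds.

```python
import numpy as np

def best_rigid_transform(source, target):
    """Least-squares R, t such that R @ source_i + t ~= target_i (Kabsch method)."""
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection sneaking into the solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = tgt_c - R @ src_c
    return R, t

def icp(source, target, iterations=30):
    """Align source to target; returns the accumulated R, t and the aligned cloud."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbour of every source point in the target.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matches = target[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matches)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, current

# Hypothetical test data: the same cloud seen from a slightly different pose.
rng = np.random.default_rng(0)
target = rng.uniform(-0.5, 0.5, (150, 3))
angle = np.deg2rad(2.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.01, -0.005, 0.0])
source = target @ R_true.T + t_true

R_est, t_est, aligned = icp(source, target)
print(np.abs(aligned - target).max())
```

ICP only converges from a reasonable initial guess, so real scans taken from very different viewpoints usually need a coarse alignment first.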

However, this whole process is easier said than done and would probably require a lot of effort, but it could still be a nice starting idea!

@Tabjones that sounds interesting. I read a little about PacmanVision, and my first thought is that I cannot build a single point cloud of the whole finger: when the finger moves from open to closed its geometry changes, and then the single point cloud would no longer match, right?

But I could make point clouds of every phalanx, since each one differs in size and slightly in shape. Perhaps I could then detect all of them, but I don’t know how good the Kinect One is at detecting pieces shaped like an 8 mm tube a few centimeters long. Do you have any experience with that?

@flobotics no, you should not change the hand configuration while you are scanning. You must assume the body is rigid, or you’ll have nightmares trying to register the pieces. Non-rigid reconstruction is possible (see DynamicFusion), but that level of awesomeness is still sci-fi for my level of expertise!!

That said, yes, you could scan every single piece and assemble a URDF out of them. I have some experience with that: I tried to make an IKEA kitchen object database in the past (Object-DB), built with a Kinect and a simple turntable. However, 8 mm is probably too small for the Kinect to be accurate enough to produce a decent model, so you would have to move to laser scanners or other very expensive 3D sensors… so maybe that is not the right way.

I think you mentioned you 3D printed your pieces, so you must already have the CAD files or meshes. Why not use them directly in your URDF model?

@Tabjones No, I don’t mean changing the finger configuration while scanning for a single point cloud. I mean live-scanning it to detect the current angle of every phalanx while it moves.

Hmm, 8 mm being too small does not sound good.

I have the STL files, but that’s not what I was trying to achieve. I am looking to map the existing STL/URDF model onto the point cloud so that I can extract the joint angles. Those were my thoughts.

But thanks anyway, I will look at PacmanVision and test a few things, just to get a feeling for what works and what doesn’t.

@flobotics, oh OK, I misunderstood what you were trying to achieve. What you want is more complicated, I think, at least if you want to do it online. One way could be to put a marker on each phalanx and track the markers: since you know where you put each marker with respect to its phalanx, you can compute the joint angles with some math. There’s a ROS package for tracking markers in case you don’t already know it: http://wiki.ros.org/ar_track_alvar, but 8 mm is probably too small for any marker!

If you don’t want to do that, another way could be model-based matching, i.e. find an instance of your STL phalanx in the point cloud, measure its pose, and then extract the angles, but this could be harder.
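For the marker approach, the "some math" can be sketched as follows, assuming the joint is a pure hinge and that each marker orientation is available as a 3x3 rotation matrix in the camera frame. The names `axis_rotation`, `hinge_angle` and `R_rel_zero` (the relative marker rotation recorded once with the joint at zero) are hypothetical, not part of any tracking package.

```python
import numpy as np

def axis_rotation(axis, angle):
    """Rotation matrix for a rotation of `angle` radians about `axis` (Rodrigues)."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    K = np.array([[0.0, -a[2], a[1]],
                  [a[2], 0.0, -a[0]],
                  [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def hinge_angle(R_parent, R_child, axis, R_rel_zero=np.eye(3)):
    """Signed joint angle about `axis` (expressed in the parent marker frame).

    R_parent, R_child: marker orientations in the camera frame.
    R_rel_zero: relative rotation parent->child measured at joint angle zero.
    """
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    # Rotation of the child relative to the parent, with the zero offset removed.
    R = R_parent.T @ R_child @ R_rel_zero.T
    # For a rotation about a: R - R^T = 2 sin(theta) [a]_x and trace(R) = 1 + 2 cos(theta).
    vee = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return np.arctan2(a @ vee / 2.0, (np.trace(R) - 1.0) / 2.0)

# Hypothetical check: build a child pose from a known angle and recover it.
axis = [0.0, 0.0, 1.0]
R_parent = axis_rotation([1.0, 2.0, 3.0], 0.7)   # arbitrary camera-frame pose
R_zero = axis_rotation([0.0, 1.0, 0.0], 0.3)     # marker mounting offset
R_child = R_parent @ axis_rotation(axis, 0.6) @ R_zero
print(hinge_angle(R_parent, R_child, axis, R_zero))
```

With noisy detections the relative rotation is never a perfect hinge rotation; the formula then returns the component about the chosen axis only approximately.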

In any case my program could still be useful, so just let me know if you have trouble running it and I’ll help you. Good luck!

@Tabjones I installed PacmanVision, then ran roscore, kinect2_bridge and then PacmanVision, but I only get a black screen?

@flobotics, the rviz fixed frame “qb_delta_base” is wrong. It comes from my setup and probably got uploaded by mistake in the default configuration … oops. Just change it in rviz to the kinect2_bridge reference frame, which I believe is “kinect2_rgb_optical_frame”.

Then in the pacman_vision GUI click on the Point Cloud Acquisition tab (yes, it’s a tab, I should probably change the layout!) and select the subscriber to the kinect2 topic there. It should work after these steps.

Once you start seeing something you can apply the filters you want by clicking the corresponding buttons. Later on you can save the current configuration (the “save current configuration” button) so you can reload it the next time you start.

@Tabjones thanks, now it works, and it works great. As you can see, a point cloud of the finger is visible, which I think is great: I calibrated kinect2_bridge with just 25 pictures, and since the finger is round I expected the ToF beams to be reflected at the wrong angle, so that the Kinect could not see it. I will check that next time.

But I think it will be possible to extract the angle. I will also check ar_track_alvar, which could help me too, thanks.

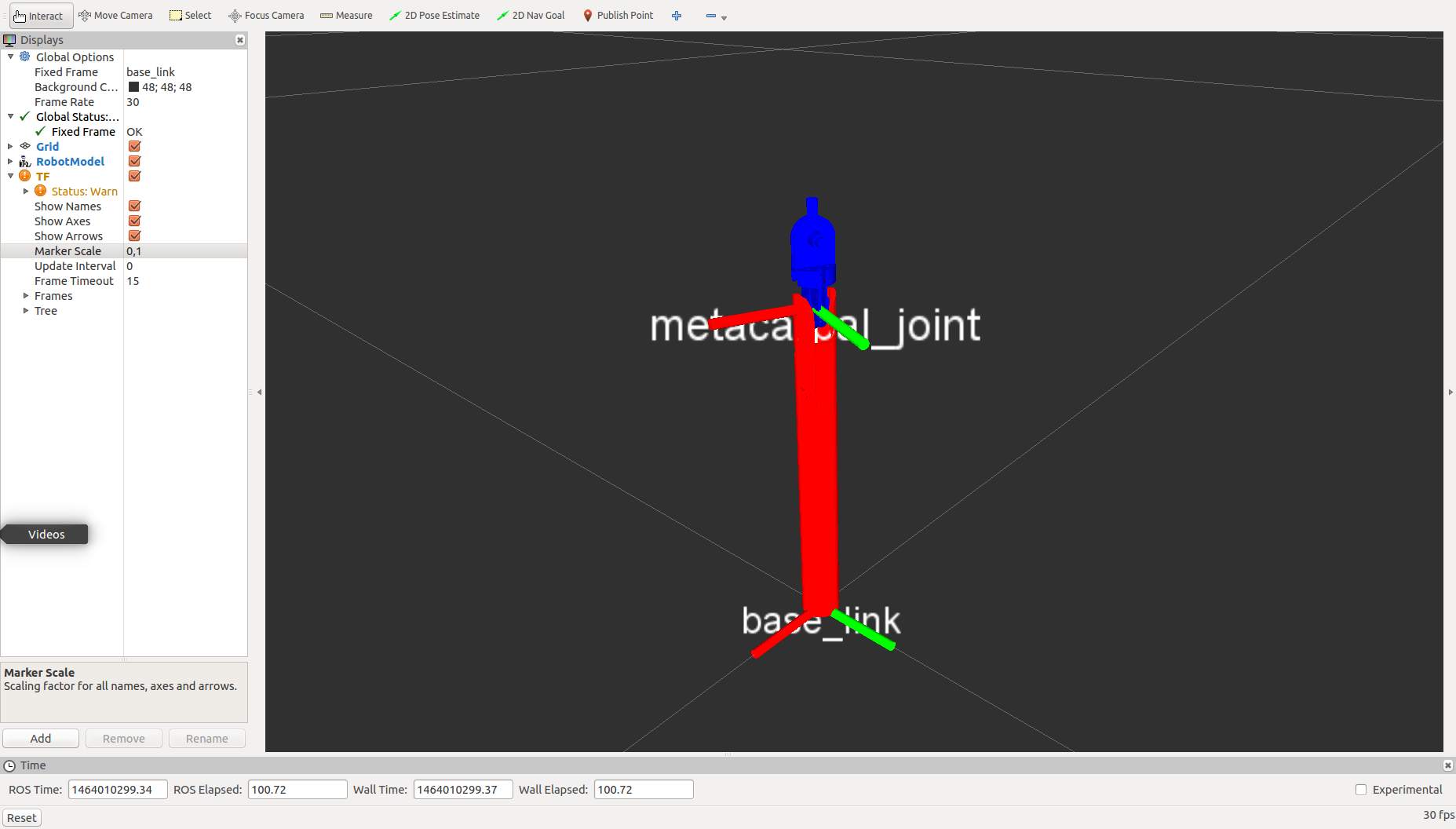

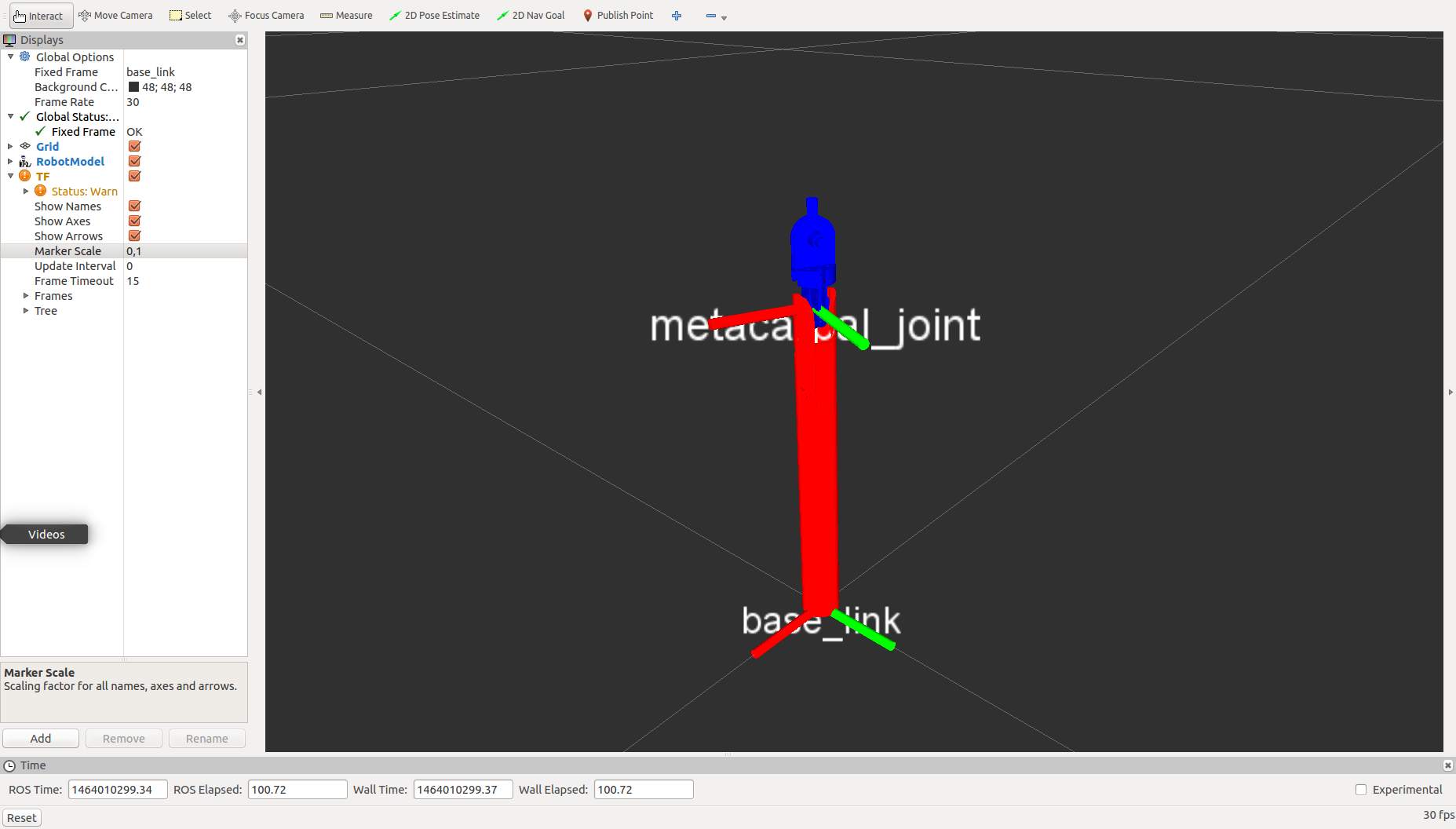

I installed ar_track_alvar, stuck two markers (individualMarkers) onto the distal and intermediate phalanx, and here is the output.

I still have problems detecting the markers with the kinect2, and when they are detected, they jitter around. I need to recalibrate the kinect2 and hope it gets better, but for angle detection it looks good.

kinect2_bridge at only 2 fps pushes all 4 CPU cores over 70%, not good.

Hints are always welcome.

@flobotics, good progress!

A few comments:

- Markers will always flicker (at least with a Kinect) no matter what you do. However, the error is often negligible for most applications and acceptable given the relatively low-cost sensor you are using. Also, I don’t think recalibrating the Kinect will improve marker detection quality, so I wouldn’t waste time on it.

- You could build a moving average filter or a Kalman filter to stabilize the stream of transformations from the markers, but I have found the error acceptable, and building such a filter is often not worth it.

- kinect2_bridge is slow. I don’t know your PC specs, but you should use the OpenCL/CUDA registration; I get a steady 30 Hz with that and the qhd topic. The hd topic is always too slow for my taste (~10-12 Hz), so I tend not to use it.
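If the flicker does turn out to matter, a filter of the kind mentioned in the second comment can be quite small. The sketch below uses an exponential moving average rather than a windowed mean or a Kalman filter, blends quaternions by normalized linear interpolation, and assumes poses arrive as a translation plus an (x, y, z, w) quaternion. The class name `PoseSmoother` and the `alpha` parameter are made up for this example.

```python
import numpy as np

class PoseSmoother:
    """Exponential moving average over marker poses (translation + quaternion)."""

    def __init__(self, alpha=0.2):
        # alpha close to 0: heavy smoothing, more lag. alpha = 1: no smoothing.
        self.alpha = alpha
        self.translation = None
        self.quaternion = None

    def update(self, translation, quaternion):
        t = np.asarray(translation, dtype=float)
        q = np.asarray(quaternion, dtype=float)
        q = q / np.linalg.norm(q)
        if self.translation is None:
            # First measurement initializes the filter state.
            self.translation, self.quaternion = t, q
        else:
            # q and -q describe the same rotation; pick the sign closest to the state.
            if np.dot(q, self.quaternion) < 0.0:
                q = -q
            a = self.alpha
            self.translation = (1.0 - a) * self.translation + a * t
            blended = (1.0 - a) * self.quaternion + a * q
            self.quaternion = blended / np.linalg.norm(blended)
        return self.translation, self.quaternion

smoother = PoseSmoother(alpha=0.5)
smoother.update([0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0])
t_smooth, q_smooth = smoother.update([1.0, 0.0, 0.0], [0.0, 0.0, 0.0, -1.0])
print(t_smooth, q_smooth)
```

The linear quaternion blend is only a reasonable approximation when consecutive orientations are close, which is the usual case for jitter.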

@Tabjones my kinect2 calibration is better now, but my PC runs at 100% when I run kinect2_bridge at more than 2 fps, and then I cannot run anything else alongside it, like a TensorFlow network.

I watched some YouTube videos of ar_track_alvar, and it always seemed to flicker a little, but like you said, perhaps with a little filtering it’s OK.

I tried it with OpenGL and OpenCL, but there was no change in speed on my PC.

Update: ar_track_alvar alone is using 100% CPU on my computer, is this normal?

I printed some cubes with clips so I can attach them to the finger. The clips have different lengths so that the cubes do not touch each other when the finger moves.

When they are attached to the finger, it looks a little funny.

Now I need some more AR markers, and then I will run ar_track_alvar again.

I installed visp_auto_tracker, and the first results are that it is more stable than ar_track_alvar and uses only 80% of my 4 cores.

https://www.youtube.com/watch?v=5xYyCqz5Ruc

The downsides are that out of the box it only recognizes this big QR code, only within 40 cm of the camera, and that the detected pose is not correct. But the ViSP videos online show better results, so I hope I can get there.

You can also check chilitags (https://github.com/chili-epfl/chilitags). It is based on OpenCV and has quite good performance. I use it on a Nao robot.

In the first visp_auto_tracker test I had printed a bad QR code (not square, no proper borders). I printed a new one, but it is still not detected well, even at this paper size.

https://www.youtube.com/watch?v=2WJsd6y7dTo

Perhaps there are some more options I can find.

@OkanAsik thanks, that looks cool. You also used very small tags in your videos; what is the smallest size it can detect? Is a border size of 1 - 2 cm big enough?

@OkanAsik I installed chilitags and ros_markers, and without any configuration it runs very well. My CPU is running at 100%, but the detection is still good.

https://www.youtube.com/watch?v=5ecTl4JUoIU

Is there a way to use another style of marker in rviz? I want to glue 5 tags onto a cube, and then there are a lot of these markers in a tiny space, which makes them hard to see.

Good software, thanks for the tip.

After hours and hours of testing I put together a markers_configuration_sample.yml file that represents a cube with 5 tags. I don’t think the config is really correct, but it works so far. If someone could help me here, that would be nice. Here is the cube with chilitags. The cube has a side length of 20x20 mm and the black border of the tag is 13x13 mm.

My chilitags config for this cube is:

    distal_phalanx:
        - tag: 15
          size: 13
          translation: [0., 0., 0.]
          rotation: [0., 0., 0.]
        - tag: 3
          size: 13
          translation: [16.5, 0., -3.5]
          rotation: [0., -90., 0.]
        - tag: 4
          size: 13
          translation: [13.0, 0., 13.0]
          rotation: [0., -180., 0.]
        - tag: 2
          size: 13
          translation: [-3.5, 0., 16.5]
          rotation: [0., 90.0, 0.]
        - tag: 14
          size: 13
          translation: [0., -3.5, 16.5]
          rotation: [-90.0, 0., 0.]

And here is a little video. Keep in mind that my CPU is running at 100% and that I am using the chilitags camera intrinsics, not those of the Kinect (next thing to do).

https://www.youtube.com/watch?v=YHrjVUnd9T0

I created a second cube and config, so now I get two tf frames from chilitags.

https://www.youtube.com/watch?v=lwT_od_mcyg

How can I use these two tf frames from chilitags for my URDF model?

The URDF model consists of two bodies and one joint, just as the two cubes in the video are connected by one joint. Is there a tool or anything that lets me simply use the chilitags tf frame information for the URDF tf frames? tf_echo to joint_state, and then publish this to robot_state_publisher? Perhaps the MoveIt! people use QR tags on robot arms and do something similar to what I want?

I didn’t find any tools for that, so I wrote a node which computes the angle between the two tf frames of the chilitags cubes and publishes it to /joint_states.
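For readers who want to try the same thing, a node like that could look roughly like the sketch below. This is not the code from the video: the frame names, the joint name and the choice of the z axis as hinge axis are assumptions, and the ROS imports sit inside `run_node` so that the angle helper can be tried without a ROS installation.

```python
import math

def hinge_angle_from_quaternion(q, axis=(0.0, 0.0, 1.0)):
    """Signed rotation about the unit vector `axis` encoded in q = (x, y, z, w)."""
    x, y, z, w = q
    # Project the vector part of the quaternion onto the hinge axis (twist component).
    proj = x * axis[0] + y * axis[1] + z * axis[2]
    angle = 2.0 * math.atan2(proj, w)
    # Wrap into (-pi, pi] so that q and -q give the same answer.
    return math.atan2(math.sin(angle), math.cos(angle))

def run_node(parent_frame, child_frame, joint_name, rate_hz=30.0):
    """Look up the tf between two tag frames and publish it as a joint position."""
    import rospy
    import tf
    from sensor_msgs.msg import JointState

    rospy.init_node("tag_frames_to_joint_state")
    listener = tf.TransformListener()
    publisher = rospy.Publisher("/joint_states", JointState, queue_size=10)
    rate = rospy.Rate(rate_hz)
    while not rospy.is_shutdown():
        try:
            # Pose of the child tag frame expressed in the parent tag frame.
            _, rotation = listener.lookupTransform(parent_frame, child_frame,
                                                   rospy.Time(0))
        except (tf.LookupException, tf.ConnectivityException,
                tf.ExtrapolationException):
            rate.sleep()
            continue
        message = JointState()
        message.header.stamp = rospy.Time.now()
        message.name = [joint_name]
        message.position = [hinge_angle_from_quaternion(rotation)]
        publisher.publish(message)
        rate.sleep()

# Quick check of the helper without ROS: 0.5 rad about z.
print(hinge_angle_from_quaternion((0.0, 0.0, math.sin(0.25), math.cos(0.25))))
```

It would be started with something like `run_node("intermediate_phalanx", "distal_phalanx", "distal_joint")`, where all three names are placeholders that have to match the chilitags config and the URDF. robot_state_publisher then picks the value up from /joint_states.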

https://www.youtube.com/watch?v=O6Pre6iVmo8

The devel branch of iai_kinect2 now supports RGB-only streams without depth, which reduces my CPU load from 95% to 60%, but the chilitags detection is still flickering?

I bought a Logitech C525 webcam to lower my CPU load, and it does: running everything is now at about 20% CPU load. I built a setup to train a neural network.

https://www.youtube.com/watch?v=AtFYajQjPYA

As input I have 2 force values for the wire ropes (one wire rope pulls to the left and one pulls to the right), 2 velocity values for the servos, and the current pose. The goal pose is the target to reach.

If the computer does path planning beforehand, with OMPL or similar, the neural network could get its loss/cost from the program itself: supervised learning in which the computer itself is the supervisor. The output should be two servo velocity values.
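To pin down the shapes involved, here is a tiny NumPy sketch of such a controller network. It is only an illustration and not the TensorFlow code from the repository: it assumes one force value per wire rope, a pose that is a single joint angle, one hidden layer of 16 units, and a mean squared error against the velocities a planner would command. All function names are hypothetical.

```python
import numpy as np

def build_input(forces, servo_velocities, pose, goal_pose):
    """Stack forces (2), servo velocities (2), current pose and goal pose into one vector."""
    return np.concatenate([np.asarray(forces, dtype=float),
                           np.asarray(servo_velocities, dtype=float),
                           np.atleast_1d(pose).astype(float),
                           np.atleast_1d(goal_pose).astype(float)])

def init_params(n_in, n_hidden, n_out, seed=0):
    """Small random weights for a one-hidden-layer network."""
    rng = np.random.default_rng(seed)
    return {"W1": rng.normal(0.0, 0.1, (n_in, n_hidden)), "b1": np.zeros(n_hidden),
            "W2": rng.normal(0.0, 0.1, (n_hidden, n_out)), "b2": np.zeros(n_out)}

def predict(params, x):
    """Forward pass: the output is the two servo velocity commands."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])
    return hidden @ params["W2"] + params["b2"]

def planner_loss(predicted, planner_velocities):
    """Mean squared error against the velocities taken from the planned path."""
    diff = predicted - np.asarray(planner_velocities, dtype=float)
    return float(np.mean(diff ** 2))

# Hypothetical sample: forces in N, velocities in rad/s, poses in rad.
x = build_input(forces=[1.2, 0.4], servo_velocities=[0.1, -0.1],
                pose=0.3, goal_pose=0.8)
params = init_params(n_in=x.size, n_hidden=16, n_out=2)
command = predict(params, x)
print(command, planner_loss(command, [0.2, -0.2]))
```

Training would then minimize `planner_loss` over many recorded samples with any gradient-based optimizer.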

Does anyone have experience with something like that?

I am writing some TensorFlow code with ROS; perhaps someone is interested in helping or taking a look.

https://github.com/flobotics/flobotics_tensorflow_controller